Are the 13 Keys to the White House Worthless?

Allan Lichtman’s “13 Keys” election prediction system has a disputed track record and serious flaws. But it might still be worth learning about. A politics update by Jacob Cohen (@Conflux).

A common misconception I hear, when I tell people I work in election forecasting, is that Allan Lichtman’s “13 Keys to the White House” mean anything at all.

“But I watched a video in the New York Times about it,” you might say. Or: “Didn’t Professor Lichtman tweet that Nate Silver doesn’t have the faintest idea how to turn the keys?” Or: “Lichtman says the keys have been correct in every election since 1984. Were you even alive in 1984?”

Grudgingly, I admit that I wasn’t. And I wasn’t even alive in 2000, the year Gore won.

…wait, he didn’t? But it says in the Amazon blurb for Lichtman’s 2000 book about the Keys that “readers who want to know why Al Gore will be the next president should not miss this book.”

The Fine Print

Fine, although Lichtman glossed over it, he did technically say that his system predicted the popular vote, a fact made clear in the blurb for his 2008 edition.[1] But wait. When I check out the 2024 blurb, it says Lichtman was “one of the few pundits who had correctly predicted the outcome”: namely, that Trump would win the popular vote.

…hmmm, he said he “switched from predicting the popular vote winner to the Electoral College winner because of a major divergence in recent years between the two vote tallies”? Without changing any of his methodology?

Seems like he doesn’t want to admit he’s wrong.

Perhaps, like 60.5% of those who answered this poll, you’re dismissive.

Or perhaps, like 31.6%, you haven’t really heard of the 13 Keys!

So I think it’s time to go into more depth. Is Lichtman’s model really a total fraud, or can we learn anything from it? (Spoiler: It’s mostly a fraud, but it’s still interesting, and teaches us about both political science and statistics.)

To start, after the cut, I’ll play keys’ advocate and explain how the model works. Then I’ll dive deeper and analyze whether we can learn anything from the model.

Allan J. Lichtman is a Distinguished Professor of History at American University. (This contrasts with his Twitter-feud rival Nate Silver, whom he accused of having “no academic credentials.”) Way back in 1981, Lichtman came to a bold conclusion about American presidential elections:

All this campaigning and polling that everyone cared so much about — it didn’t matter.

Instead, in Lichtman’s view, every election is a referendum on the party currently in power.

Did they achieve an important foreign policy victory? That’ll help. Did they face a primary challenger? That’ll hurt. And so on. Polls might bounce up and down as voters react to the latest campaign soundbites, but once they fill out their ballots, it’s down to the meat and potatoes. (This gives Lichtman’s approach more power than Silver’s polling-based model: he can predict elections years out, before polls have much predictive power at all.)

The Forging of the Keys

Ultimately, along with earthquake scientist Vladimir Keilis-Borok, he identified 13 factors — the infamous 13 Keys to the White House — that influence elections. To win, the party in power (the “incumbent”) must secure at least 8 keys. Otherwise, they’ll lose (to the “challenger”).

Party mandate: After the midterm elections, the incumbent party holds more seats in the U.S. House of Representatives than after the previous midterm elections.

No primary contest: There is no serious contest for the incumbent party nomination.

Incumbent seeking re-election: The incumbent party candidate is the sitting president.

No third party: There is no significant third party or independent campaign.

Strong short-term economy: The economy is not in recession during the election campaign.

Strong long-term economy: Real per capita economic growth during the term equals or exceeds mean growth during the previous two terms.

Major policy change: The incumbent administration effects major changes in national policy.

No social unrest: There is no sustained social unrest during the term.

No scandal: The incumbent administration is untainted by major scandal.

No foreign or military failure: The incumbent administration suffers no major failure in foreign or military affairs.

Major foreign or military success: The incumbent administration achieves a major success in foreign or military affairs.

Charismatic incumbent: The incumbent party candidate is charismatic or a national hero.

Uncharismatic challenger: The challenging party candidate is not charismatic or a national hero.
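The decision rule itself is simple enough to sketch in a few lines of Python. This is only an illustration: the `KEYS` labels are shorthand for the list above, and the `predict` function and the example tally are hypothetical, not anything Lichtman publishes. All the real work (and all the subjectivity) is in deciding which keys count as held.

```python
# Shorthand labels for the 13 keys listed above (the names are illustrative).
KEYS = [
    "party_mandate", "no_primary_contest", "incumbent_seeking_reelection",
    "no_third_party", "strong_short_term_economy", "strong_long_term_economy",
    "major_policy_change", "no_social_unrest", "no_scandal",
    "no_foreign_or_military_failure", "major_foreign_or_military_success",
    "charismatic_incumbent", "uncharismatic_challenger",
]

KEYS_NEEDED = 8  # the incumbent party must hold at least 8 of the 13 keys


def predict(keys_held):
    """Return 'incumbent' or 'challenger' given the keys the incumbent party holds."""
    held = set(keys_held)
    unknown = held - set(KEYS)
    if unknown:
        raise ValueError(f"unknown keys: {sorted(unknown)}")
    return "incumbent" if len(held) >= KEYS_NEEDED else "challenger"


# Hypothetical tally: the incumbent party holds only the first seven keys,
# one short of the threshold.
print(predict(KEYS[:7]))
```

Note that the rule only looks at the count: which keys are held, and by how wide a margin the threshold is cleared, make no difference to the call.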

I think the list format gives the 13 Keys their star power. Who will score the party mandate key? What about the contest key? It’s reminiscent of a video game, or the novel Ready Player One.

It turns Professor Lichtman into a wizard: only he can turn the keys and prognosticate the result with one hundred percent certainty; for polls come and go, but the keys are hardened metal as old as time.

The 13 Keys Are Seemingly More Impressive Than Advertised

Lichtman likes to advertise the 13 Keys as having correctly predicted all ten elections since 1984. Maybe that’s an exaggeration, due to the issues I mentioned earlier. But did you know that the 13 Keys also correctly predicted the last thirty-one elections before 1984?

Yes, they were invented in 1981. But during this process, Lichtman back-tested his model to “retrodict” every single contested election since 1860. And it gets all thirty-one of them correct.

Every single one.

Some Math

Hmm. Suppose the model has a 90% chance of calling an election correctly. That’d be incredibly good! Especially given the number of close calls we’ve had, from JFK vs. Nixon to “Dewey Defeats Truman” to the 1880 popular vote, which happened to come down to 2,000 votes.

Then that’s a 10% chance of an error. In 31 elections, you’d expect an average of 3.1 of them to be wrong. And the chance of getting them all right? That’s 0.9^31 ≈ 3.8%, low enough to clear the conventional 5% “statistical significance” threshold.
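If you want to check that arithmetic, here is a minimal Python sketch. It assumes each election is an independent call with the same 90% accuracy, which is a simplification of mine rather than anything in Lichtman's model; the helper name `p_at_most` is just for illustration.

```python
from math import comb

p_correct = 0.9    # assumed per-election accuracy, as in the text
n_elections = 31   # retrodicted elections, 1860 through 1980

expected_misses = n_elections * (1 - p_correct)
p_perfect = p_correct ** n_elections


def p_at_most(k_misses, n=n_elections, p=p_correct):
    """Probability of k_misses or fewer wrong calls under a binomial model."""
    q = 1 - p
    return sum(comb(n, k) * q**k * p ** (n - k) for k in range(k_misses + 1))


print(f"expected misses: {expected_misses:.1f}")
print(f"chance of a perfect record: {p_perfect:.1%}")
print(f"chance of at most one miss: {p_at_most(1):.1%}")
```

Even allowing one miss, a model this accurate would usually do worse than the Keys' retrodictions, which is what makes the perfect record look suspicious rather than impressive.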

So the keys are magic — or maybe there’s something fishy going on.

Interlude: The True Key to the White House

Did you know that, since 1980, the vice presidential candidate whose first name comes first alphabetically always wins? It’s true! Kamala Harris precedes Mike Pence (2020), Mike Pence precedes Tim Kaine (2016), Joe Biden precedes Paul Ryan (2012), and so on. Just thought you should know this insane alpha.[2]

Nate Silver’s Explanations

In a 2011 article wittily titled “Despite Keys, Obama Is No Lock” (where I got a lot of my understanding from), Nate Silver plots all elections from 1860 to 2008:

On the y-axis, we’ve got the margin of victory in the popular vote. And on the x-axis, the number of keys that Lichtman scored in favor of the incumbent party.

(As a reminder, according to Lichtman, the incumbent party needs at least eight keys to win.)

This chart is crazy.

Behold, all the dots in the top right. Those represent all the popular-vote victories for the party in power. In these cases, because the Keys are magical, they always had at least eight keys. And behold their mirror image, those in the bottom left. In those, the incumbent lost, and because the Keys are magical, they had seven or fewer.

What stands out to me is that there is otherwise ALMOST NO CORRELATION between number of keys and margin of victory.

Like, look at the dot at (4, 0). That’s the 1960 election, between John F. Kennedy and Richard Nixon. JFK got 49.7% to Nixon’s 49.5%. Yet if you look at the Keys, you’d expect an absolute JFK landslide. Nixon (who is from the incumbent party) has a mere four keys!

Sure is incredible luck that no dots happened to appear in the top left or bottom right of the chart.

Nate Silver’s Commentary

Or … maybe it’s something other than luck. Although none of Mr. Lichtman’s keys are intrinsically ridiculous (for example, “which candidate had more ‘n’s in their name”), one can conceivably think of any number of other areas that might have been included in the formula but which are not — looking at how messy the primaries were for the opposition party, for example, or the inflation rate, or the ideological positioning of the candidates. (I mention these particular ones because there is some empirical evidence that they do matter.)

If there are, say, 25 keys that could defensibly be included in the model, and you can pick any set of 13 of them, that is a total of 5,200,300 possible combinations. It’s not hard to get a perfect score when you have that large a menu to pick from! Some of those combinations are going to do better than others just by chance alone.

(To get technical, Silver argues that Lichtman committed the statistical sins of overfitting and data dredging.)
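Silver's count is easy to reproduce. The sketch below uses Python's `math.comb`; the menu of 25 candidate keys is Silver's hypothetical, and the other menu sizes are only there to show how fast the number of possible models grows.

```python
from math import comb

KEYS_IN_MODEL = 13


def possible_models(menu_size, keys_in_model=KEYS_IN_MODEL):
    """Number of distinct key sets that can be drawn from a menu of candidate keys."""
    return comb(menu_size, keys_in_model)


# Hypothetical menu sizes; 25 is the figure used in Silver's example.
for menu_size in (13, 15, 20, 25, 30):
    print(f"{menu_size} candidate keys -> {possible_models(menu_size):,} possible models")
```

With millions of candidate models and only a few dozen elections to fit, finding one with a perfect backtest says little about whether it will keep working.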

Another Problem: Subjectivity

Perhaps you noticed, when you read the list of keys, that some of them weren’t exactly objective.

For example, “Charismatic incumbent.” This is a factor with strong predictive power. (In 2004, Paul Graham wrote an essay called “It’s Charisma, Stupid” arguing that this might be the key factor that explained why Bill Clinton could win but John Kerry couldn’t. The theory has aged somewhat well; Barack Obama is generally considered more charismatic than Hillary Clinton.)

But who decides who is charismatic? Is it possible that we decide after the fact, based on who wins, that actually they were more charismatic?

Did people think, before Biden dropped out, that Kamala Harris was charismatic?

The 2024 election right now is a statistical tie — and yet I predict that, if Harris wins, she will be retroactively considered much more charismatic than if she loses.

One more Nate Silver quote:

It’s less that he has discovered the right set of keys than that he’s a locksmith and can keep minting new keys until he happens to open all 38 doors.

But Don’t the Keys Still Have a Pretty Good Track Record?

I’ve spent most of this newsletter criticizing the Keys. But I’m still a little impressed.

Indisputably true: Allan Lichtman used his 13 Keys to correctly predict the election results in 1984, 1988, 1992, 1996, 2004, 2008, 2012, and 2020.

Not bad — although some of those, like Reagan’s 1984 reelection and Clinton’s 1996 one, could be seen from a mile away. And was it much of a claim to suggest that any Republican would lose amidst the 2008 financial crisis?

But Lichtman’s Keys did call 2012 before Nate Silver. And he predicted 1988 while Bush was still losing to Dukakis in the polls. And with all the hindsight bias that goes on, 8 out of 10 (well, 9 out of 10 if you give Lichtman either 2000 or 2016 — he can’t have both) is pretty good!

I think I’ve been a little too dismissive of Allan Lichtman. The 13 Keys to the White House do provide us a valuable reminder: the election isn’t all about the campaign. You can have a killer debate and not move the polls by more than 1 percent. Much of it comes down to the fundamentals! To the short- and long-term economy, the major policy change, the social unrest, the scandal status, the foreign or military failure or success, the party mandate, the primary contest, whether the incumbent is seeking reelection, whether there’s a third party challenge, and which candidate is more charismatic.

Still, Lichtman Doesn’t Move Markets

Earlier this election season, Lichtman tallied up the keys and found that Joe Biden would win the 2024 election. There’s no key for “Incumbent party nominee is unable to string sentences together during a nationally televised debate.” There’s no key for “Incumbent party polls are plummeting” (that’s rather the point). There’s no key for “Party officials mount an increasingly strong pressure campaign to oust their president.”

You might think that last one would fall under “Primary contest” — but not according to Allan Lichtman, who gives the Democratic Party (both Biden and now Harris) a shiny key for their united front during primary season.

(Nate Silver disputes Lichtman’s application of the Keys, saying that when he uses them, he gets a Trump victory. This led to the memorable tweet from Lichtman about how only he could turn keys.)

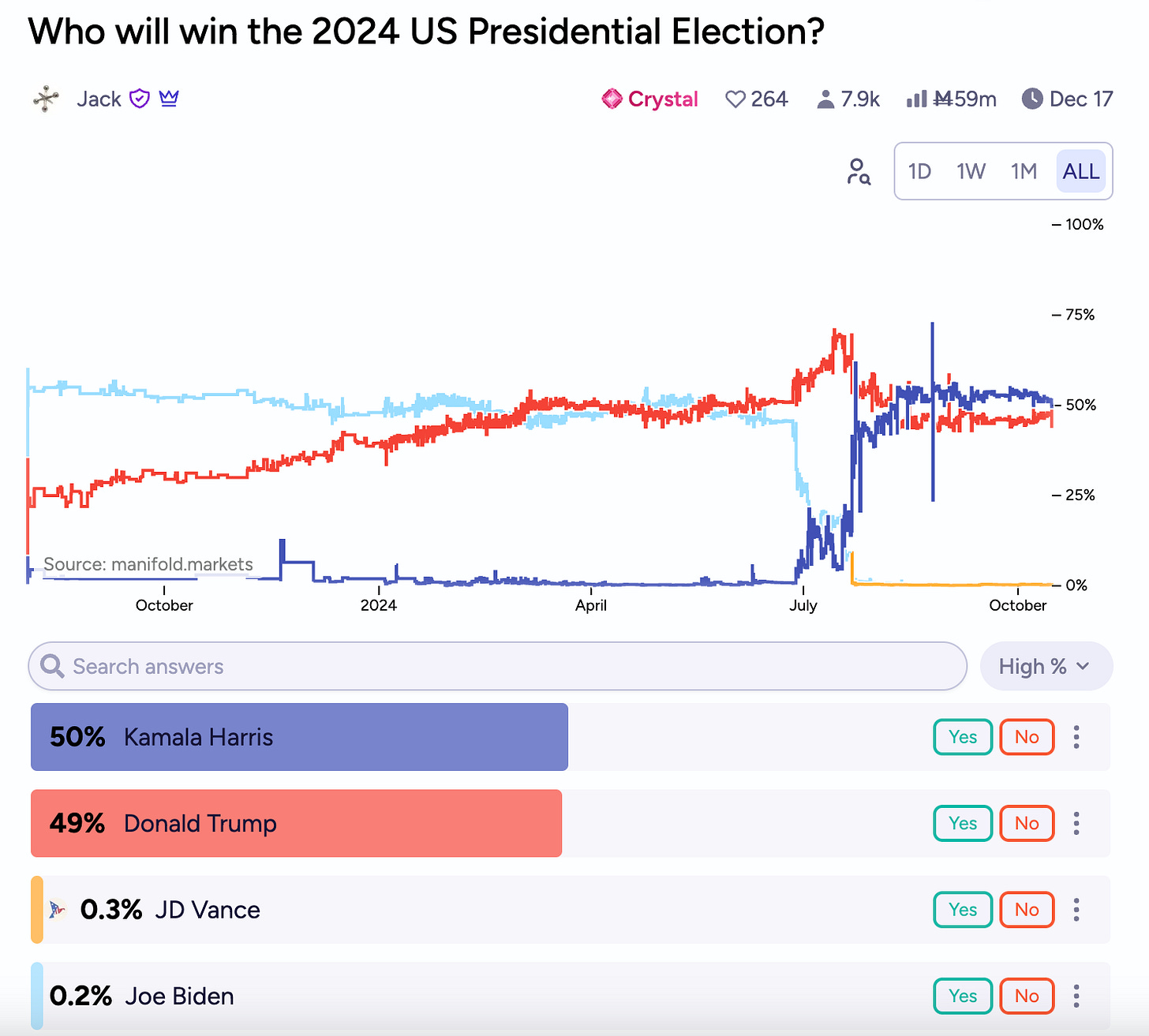

So when Lichtman pronounced that Biden had enough Keys, and later that Harris did, newspapers may have given breathless coverage. But our Manifold markets did not move! We show a nail-biter race.

Most of the Key-specific markets don’t have enough traders to be conclusive, but our hunch is that Lichtman’s at around a 60% chance to predict 2028 correctly,[3] that the New York Times might give him coverage for it, and that if Harris wins the popular vote but loses the election, it’s more likely than not that Lichtman will pivot back to the claim that the Keys predict the popular vote.

That’s because Manifold’s top forecasters understand that, while the 13 Keys underscore real fundamental factors that impact elections, Allan Lichtman is not a wizard, and his keys do not have magical powers.

One Last Market

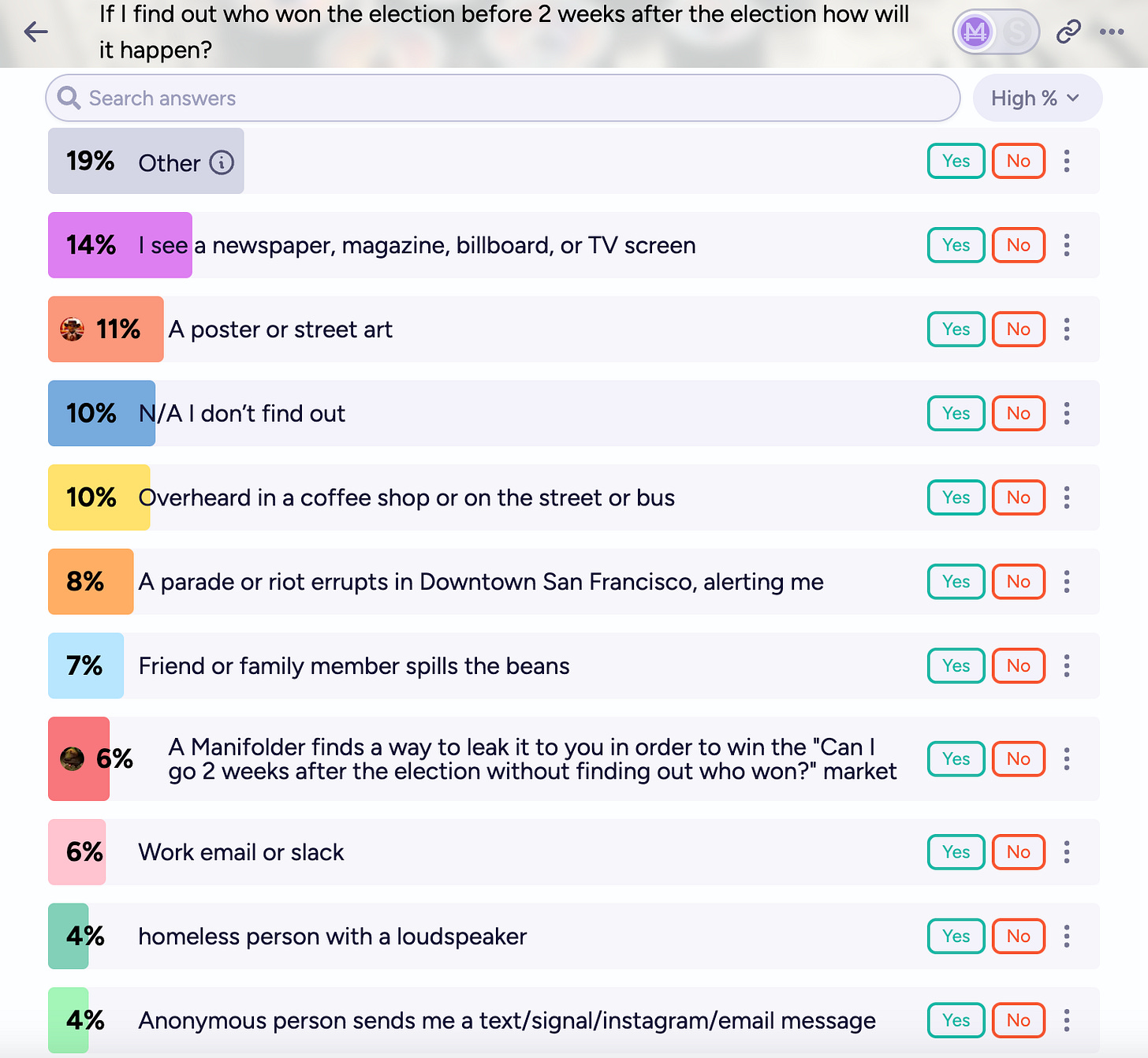

My tradition is to highlight a “fun” market, and this one was an easy pick. The creator, known as TBTBTB @tftftftftftftftftftftftf, has an extremely popular market “Can I go 2 weeks after the election without finding out who won?” I try to showcase less popular markets, so this is a derivative on how the creator will find out the election result:

As usual, I’ve given the market a M1,000 subsidy to encourage accuracy.

Finally, in honor of Allan Lichtman, I decided to borrow his format to summarize the flaws in his model.

The 13 Keys to Why the 13 Keys to the White House Are Silly

They’re clickbait.

Lichtman was wrong in 2000 when he used the Keys to predict Al Gore would be the next president.

After their creator Allan Lichtman announced the Keys predicted the popular vote, he got 2016 wrong by predicting Trump would win the popular vote.

Lichtman retroactively decided the Keys predicted the Electoral College based on “changed political demography,” even though he previously denounced changing his model on the fly.

The 13 Keys’ track record is … a little too good for a model made of metal.

There is a suspicious lack of correlation between number of keys and victory margin.

The model was likely made with the statistical errors of overfitting and data dredging.

The 13 Keys are written to be subjective enough that Lichtman can use them to predict whoever he wants.

He predicted Biden would win the 2024 election, and criticized Democrats calling for a candidate swap as a “foolish, self-destructive escapade” peddled by those with no experience forecasting elections.

Lichtman says the Keys predict a Harris victory, but Nate Silver interprets them to predict a Trump win.

While they are based on real political science concepts, at their best the Keys show the obvious.

Top Manifold traders are dismissive of the 13 Keys.

The 13 Keys predictions don’t move our markets!

(Top trader and almost-mod nikki posits a fourteenth key: The Forbidden Forecast is better.)

Thanks for reading, and see you next week. That’s right, Above the Fold: Manifold Politics is now planned to be weekly on Tuesdays, from today until Election Day! And you might get some bonus newsletters from David as well.

I’m Jacob Cohen (aka Conflux), a student at Stanford University. I blog at tinyurl.com/confluxblog, host puzzles at puzzlesforprogress.net, and don’t release any new episodes of the Market Manipulation Podcast on your favorite podcast platform. Check out Manifold Politics at manifold.markets/election, or via its Politics or 2024 Election categories. And if you enjoyed this newsletter, don’t forget to forward, like, share, comment, and/or subscribe to Above the Fold on Substack!

[1] He also says that the election was stolen in Florida, so Gore should have rightfully won the Electoral College as well.

[2] This model predicts a Trump victory, since JD Vance precedes Tim Walz. If Harris had picked Josh Shapiro she'd lose but more narrowly. Should've picked Andy Beshear… and Mitt Romney wouldn’t have saved us.

(Actually, this model doesn't map onto narrowness, since the incredibly narrow 1984 alphabetical result - George HW Bush vs. Geraldine Ferraro - was a landslide. Foreshadowing?)

(By the way, I also found a charming Politico article from 2016 with seven other forecasting methods ranging from voice depth to the Oscars.)

[3] Plasma Ballin’ writes, “The system is nonsense, but it would have to be really, crazily bad to anticorrelate with the true results. So, >50% it is.”

I'm pretty sure Allan Lichtman wrote long before 2000 that his keys predicted the popular vote (in his formal write-ups at least, he may not have made it clear in the more clickbaity articles), so I'm willing to give him 2000 as a correct prediction and put his track record at 9/10. It's not that surprising that he has such a good track record because:

1) As mentioned in my comment in the footnote, the keys all plausibly correlate with the actual results, so we should expect them to be right more often than not. I think the probability that he gets the average election right is higher than the 60% chance our markets give of him being right in 2028 - that probability is brought lower by the fact that 2028 will likely be another close election and therefore harder to predict than average.

2) There's selection bias in which pundits become popular. If he had a bad track record, we never would have heard about him, and instead we would have heard of Nalla Tilchman whose 25 Snap Guns to the Oval Office model predicted the correct results of every election except 2020 (She now claims that it was actually only supposed to predict the winner of Ohio). If enough people try to predict elections, we're going to end up hearing about the ones who do well purely by chance.
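The selection-bias point in 2) can be put in numbers with a rough Python sketch. Everything here is a toy assumption rather than data: each hypothetical pundit is modeled as an independent coin-flipper over ten elections, and `p_some_perfect` is an illustrative helper name.

```python
def p_some_perfect(n_pundits, n_elections=10, p_correct=0.5):
    """Chance that at least one of n_pundits independent guessers calls every election right."""
    p_one_perfect = p_correct ** n_elections
    return 1 - (1 - p_one_perfect) ** n_pundits


# Hypothetical crowd sizes; real forecasters are neither independent nor pure guessers.
for n in (1, 100, 1000, 10000):
    print(f"{n:>6} coin-flipping pundits -> "
          f"{p_some_perfect(n):.1%} chance at least one has a perfect record")
```

Raising `p_correct` above 0.5, as point 1) suggests is realistic, makes a lucky perfect record more likely still.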

What really annoys me though, is that Lichtman's main claim to fame is, "He's the guy who predicted Trump would win in 2016," when that was the one year that his model was wrong! And his model was much more wrong than, say, Nate Silver's prediction that Clinton would win the Electoral College - she was closer to winning the EC than Trump was to winning the popular vote, and Silver made his prediction with very low certainty, while Lichtman seems to have very high confidence in his model's predictions.

The other thing that annoys me is that major news organizations report on his model so uncritically, completely ignoring the rather egregious fact that he completely changed what his model predicts after 2016 with no plausible theoretical basis, and the fact that he lied about this (He has falsely claimed that he actually changed it before 2016). Even if we take the 13 Keys completely seriously, they only predict that Harris will win the popular vote, which isn't a very bold prediction - everyone expects that already.

> He also says that the election was stolen in Florida, so Gore should have rightfully won the Electoral College as well.

This is quite probably true (in the sense that Gore should have won, not that there was a conspiracy or outright fraud), but also, it doesn't really matter whether it was true or not. Either way, the election was so absurdly close that it was clearly impossible to predict by any method.