The Signal and the Denoiser

The Signal group chat scandal buries the lede on growing US-Iran tensions, and new diffusion models show breakthrough performance in Ghiblifying things.

👊🇺🇸🔥

It has been a shocking week since The Atlantic published, on Monday, an article documenting how its editor-in-chief, Jeffrey Goldberg, had been added to a group chat in which the national security leaders of the United States discussed plans to attack targets in Yemen. After the administration downplayed the significance of the group chat, The Atlantic published further evidence that the messages, sent over the Signal app, included specific battle plans.

This has sent Washington into overdrive, with potential lawsuits and congressional oversight ramping up, and conflicting reports of the president’s ire directed at Mike Waltz and Pete Hegseth over responsibility for the snafu.

Manifold traders think it’s unlikely that anyone will face consequences for the group chat leak, placing the odds of a firing or resignation occurring at only 35%.

The odds of Waltz, Hegseth, or another member within the group chat itself facing consequences are even lower, implying that a staffer might end up taking the fall.

Manifold continues to view Defense Secretary Hegseth as the most likely to leave Trump’s cabinet first, followed by Tulsi Gabbard, the Director of National Intelligence. Both of these officials have come across poorly in the unfolding scandal.

However, the scandal may be masking a far more significant news story. The administration’s national security team appears to be actively thinking about how to position and sell the potential for a larger conflict with Iran to the American public.

Iran Tensions Rise

The US is staging serious military assets in the Indian Ocean, at a base in the Chagos Islands. Some forecasters believe that a military campaign against Iranian proxies in Yemen might be a lead-up to a larger confrontation. The Oracle, Polymarket’s newsletter, has a deep dive into the potential for conflict with Iran, and Manifold traders also see this as a real possibility.

While an American strike against Iranian nuclear assets has long been a possibility, the odds of a bombing campaign on Iran have risen dramatically in the last week, settling at around 70%:

Despite this, and despite some heavy volatility, most traders think this will be limited to targeted actions and is unlikely to lead the US into a full-on war by the end of the year. Mind you, 12% odds of a war between two of the largest militaries in the world still seem like they should be on the front page of every newspaper.
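A rough way to read the two numbers together: if one assumes that any full-on war would include a bombing campaign, and that both markets cover the same time window (neither assumption is confirmed by the markets' resolution criteria), then the implied chance that strikes escalate into war is roughly the ratio of the two prices. Here is a minimal Python sketch of that back-of-the-envelope arithmetic; the function name is illustrative only.

```python
def implied_escalation_probability(p_strike: float, p_war: float) -> float:
    """Estimate P(war | strike), ASSUMING war can only happen if strikes happen."""
    if not 0.0 < p_strike <= 1.0:
        raise ValueError("p_strike must be in (0, 1]")
    if not 0.0 <= p_war <= p_strike:
        # Under the nesting assumption, war cannot be likelier than strikes.
        raise ValueError("p_war must be between 0 and p_strike")
    return p_war / p_strike


# Market prices quoted above: ~70% for a bombing campaign, 12% for a war.
p_strike = 0.70
p_war = 0.12
escalation = implied_escalation_probability(p_strike, p_war)
print(f"Implied P(war | strike): {escalation:.0%}")
```

Under those assumptions, traders are pricing roughly a one-in-six chance that a bombing campaign, if it happens, turns into a war. If the two markets have different horizons or definitions, the ratio is only a loose guide.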

As for the military build-up in the Indian Ocean, traders remain skeptical (30%) that an attack is being planned for the next two months. They perhaps consider a large show of force in Yemen, or an implicit threat meant to push Iran to restart nuclear negotiations (which currently seem unlikely to meet Trump’s two-month deadline), the more likely scenario.

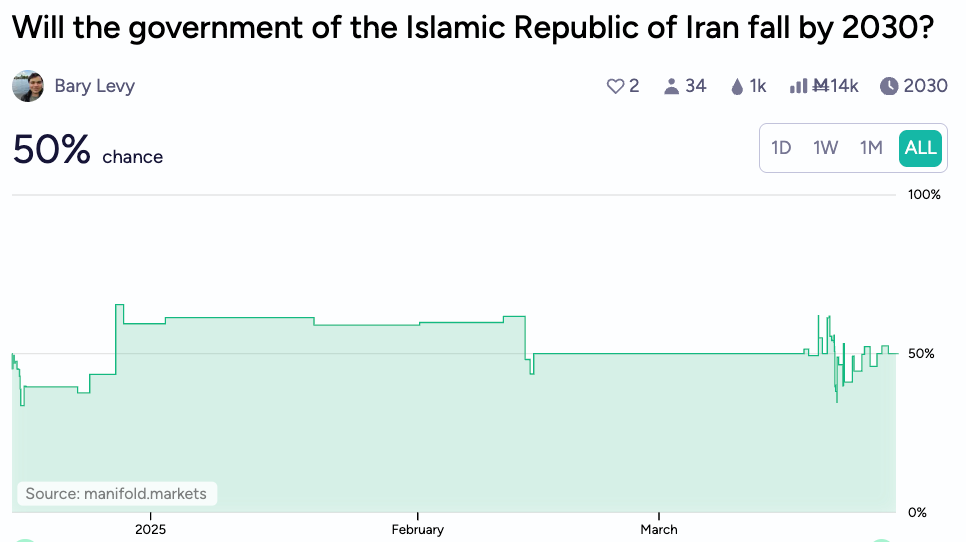

In general, Manifold views the situation in Iran as unpredictable, partly due to the instability of the current Iranian regime. Bettors think that there’s a coin flip’s odds that the Islamic Republic falls in the next 5 years, and 35% odds that the Ayatollah’s successor is selected by the end of the year.

The Ghiblification of Everything

The blistering pace of the AI race is perhaps best exemplified by two announcements on Tuesday. Google’s release of their new frontier model—hailed as the best model in the world—was quickly overshadowed by OpenAI’s update of native image generation through their 4o model. Despite the fact that Google Gemini has had similar image capabilities for a couple of weeks now, OpenAI’s package had a key advantage: it was transcendently competent at Ghiblifying images. It also, notably, allowed this despite IP concerns, unlike Google’s model (at least initially).

OpenAI is taking a gamble on two fronts, (1) releasing capabilities that open it up to potential public pushback and (2) simultaneously easing content restrictions, for an important reason. The Overton window for AI tools has shifted dramatically over the last couple of months, under an administration unfavorable to regulatory constraints on AI outputs and in view of a public distracted by more pressing news items. Regardless of OpenAI’s motivations, this will likely lead to more rapid capability growth in image generation, as top AI labs are showing themselves less reluctant to release models that are prone to creating controversial images. A long-standing market on whether instant deepfakes will be available on demand within two years jumped by 20% on the OpenAI release, presumably not just because of the capability advances but also because of big labs’ perceived willingness to allow such prompts.

The new model can do more than just Ghiblify things. While formal benchmarks for image generation are not super widespread, and an Elo-based leaderboard seems to be more idiosyncratic in its rankings than LMArena’s chatbot leaderboard, users’ personal benchmarks are getting blown out of the water rapidly. OpenAI’s new native image generation can generate very concrete images, with much more coherent text, relationships between objects, and specific features.
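For context on what an Elo-based leaderboard measures: ratings are fit so that the gap between two models predicts how often one is preferred over the other in head-to-head votes. The sketch below uses the standard Elo expected-score formula and update rule. The K-factor of 32 and the example ratings are illustrative assumptions, not the parameters of any particular leaderboard, and real leaderboards may fit ratings with a different method.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo formula: predicted share of votes won by model A over model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return new (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A was preferred, 0.0 if B was, 0.5 for a tie.
    k is an assumed step size; larger k means noisier, faster-moving ratings.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    # Elo is zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


# Two hypothetical image models that start level; A wins a single vote.
new_a, new_b = update(1200.0, 1200.0, score_a=1.0)
print(new_a, new_b)
```

Because each vote moves ratings by up to k points, a leaderboard with relatively few votes per model pair can swing noticeably, which is one plausible reason image-model rankings look more idiosyncratic than chatbot rankings.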

For example, users have found it proficient at generating an image of a realistic chessboard, and the market on whether any AI model could do that by the end of July jumped from 20% to 98%.

A longtime Manifold user’s benchmark on whether AI image generation can reliably produce polygons—something you’d assume should be easy by now—initially soared on release. However, it has now fallen back down to Earth given uncertainty on whether, even with its new capabilities, OpenAI’s image generator can resist producing hexagons instead of pentagons and heptagons. I’ve long maintained that we should appreciate the hexagons we are given rather than wishing for other, inferior polygons, but perhaps it would be nice for us to be able to reliably produce pentagons on request.

Users are speculating on whether we’ll get something analogous to chain-of-thought for an image model, as well, giving fairly good odds to some sort of “visual scratchpad” by the end of the year:

Another market on whether image models will benefit from reasoning capabilities or inference-time scaling only has three traders currently, so perhaps you, the readers, can help determine whether this is likely to be relevant for diffusion models for image generation.

A reminder that Manifest 2025 tickets are now available, and you can start to bet on the happenings this June.

Happy forecasting!

-Above the Fold