Is This the End of Human Exceptionalism?

From OpenAI's IMO breakthrough to Manifold's Squid Games

Proof By Contradiction

The academic world has a funny habit of setting what it believes are immutable benchmarks of human intellect, only for technology to come along and politely move the goalposts. Chess was the thinking man’s game until Deep Blue made Kasparov look mortal in 1997. Go represented the ineffable complexity of Eastern thought until AlphaGo systematically dismantled Lee Sedol in 2016. Each conquest was preceded by confident assertions about what machines could never achieve, statements which are now perhaps best studied as artifacts of human overconfidence.

The latest casualty? The International Mathematical Olympiad. For those unfamiliar, the IMO isn't your typical high school math test. It's a six-problem, two-day gauntlet that brings together the world's most gifted teenage mathematicians to tackle problems that would make most PhD students weep.

These are problems that require what mathematicians euphemistically refer to as “creative insight,” which translates roughly to “the kind of intellectual leap that makes other mathematicians either genuflect or check your work very, very carefully.” For years, mathematical reasoning, particularly the creative problem-solving required for IMO problems, had consistently been AI's Achilles heel.

The prevailing skepticism surrounding machine mathematical capabilities wasn’t born out of pure pessimism. Studies published just months prior showed leading LLMs scoring under 5% on the USAMO (the US qualifying competition for IMO participation). Manifold user pietrokc, writing days before the announcement, documented their attempts at solving the released IMO questions using OpenAI's o3 and described the model as failing "miserably," amid reports of other top LLMs struggling with the published problems. The consensus among those who track such things had crystallized around a simple proposition: mathematical creativity would remain stubbornly human for the foreseeable future.

This consensus was further solidified by Terence Tao, who recently offered his assessment on Lex Fridman’s podcast: “It won’t happen this IMO. The performance is not good enough in the time period.” Tao, for those keeping track, is not typically wrong about mathematical capabilities.

Traders had particular reasons for their bearishness beyond general AI mathematical limitations. As Manifold user Bayesian noted, "There were 2+ combinatorics problems in the IMO 2025, which have historically been harder for AI, and the problems were pretty easy by human standards, so the threshold for gold was likely to be higher than usual."

This consensus lasted until Saturday.

OpenAI's latest experimental reasoning model just cracked the 2025 IMO problems with a performance that's both technologically revolutionary and, for anyone still clinging to the idea of uniquely human genius, a touch unsettling.

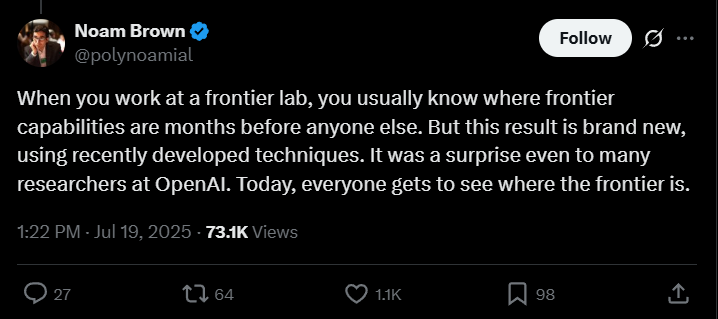

This development caught off guard not only the broader AI community but also the team at OpenAI itself.

The announcement triggered what traders described as significant market movement, though perhaps “whiplash” would be the more clinically accurate term. Months of increasingly bearish sentiment about AI mathematical capabilities reversed course with the kind of velocity typically reserved for markets on the Trump administration.

While last year’s silver-medal performance made traders hopeful, gold still seemed too great a leap, considering that AI was excelling only in certain problem domains.

DeepMind’s announcement followed in quick succession, and Manifold users began to place bets on less prominent AI labs similarly achieving gold medal performance.

While we haven’t quite reached perfect-score territory yet, the finish line feels a lot closer than anyone had expected.

The goalposts, it turns out, are more mobile than we thought. And if AI can now scale the peaks of mathematical reasoning, then we might need to rethink what we consider uniquely human at all.

Endurance Markets

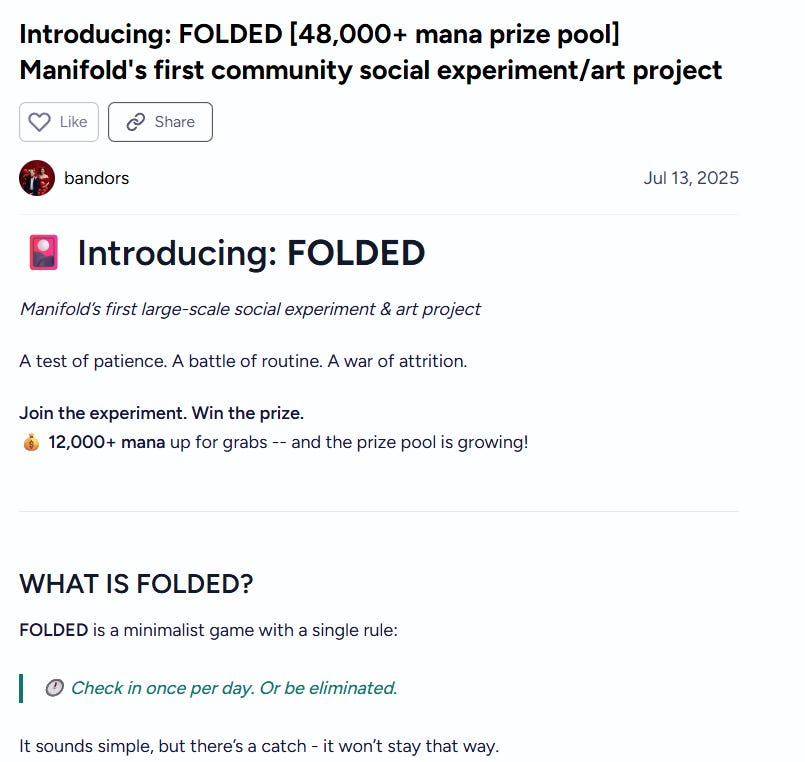

In the grand tradition of humans finding increasingly creative ways to torment themselves for internet points, Manifold has birthed Folded. It’s a social experiment that promises to be both creatively eccentric and psychologically scarring, with creator Bandors pledging ever-escalating challenges that will separate the wheat from the chaff (or, more accurately, the obsessively punctual from the merely committed).

The premise is deceptively simple: check in once per day or be eliminated.

The creator has mentioned that check-ins will become more difficult over time, though the specifics remain deliberately vague. One imagines this is where the psychological engineering and sunk costs really begin. When pressed for details about the future challenges, creator Bandors adopted the stance of a pharmaceutical company’s side effects disclosure, hinting at riddles, puzzles, or possibly just making the check-in button progressively harder to find.

Because this is Manifold, the existence of Folded has spawned its own prediction ecosystem. Users are now betting on individual participants' chances of winning, whether anyone will be eliminated on the first day, and whether the prize pool will reach 100,000 Mana.

The comments section, predictably, devolved into a blend of game theory and performance anxiety. Players immediately began debating whether teams would be allowed (they aren’t, much to the dismay of Manifold user A), proposing carefully laid-out cost-sharing arrangements, and offering meta-commentary with the kind of analysis typically reserved for actual economic policy.

Manifold user rerOid, displaying the kind of financial innovation that would make a derivatives trader weep with pride, created a market based on whether he’ll win the grand prize (and then bet against himself as a hedge). This is either brilliant risk management or a concerning level of self-doubt; frankly, both interpretations feel correct.

This enterprising user also offered “bootleg account activations” for 20 mana each with the stern caveat that “all sales are final.”

Another attempted to crowdfund their entry fee in exchange for a proportional share of potential winnings, complete with precise mathematical breakdowns of stake percentages and backup plans for tie-breaking scenarios.

When asked about mobile support, the creator proceeded to welcome mobile users with open arms (“mobile users are cancer on the web; they ruined the internet”), a response that somehow pivoted into a broader lament about how Google’s SEO requirements killed forums as a medium for long-form discussion.

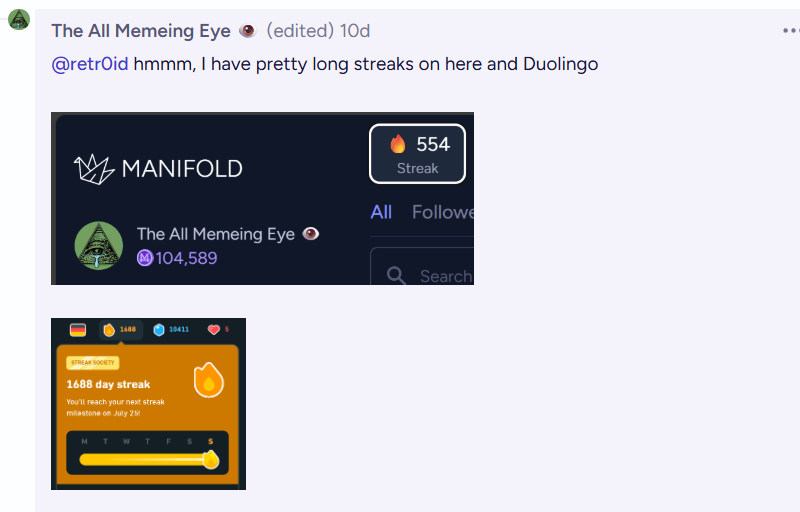

Meanwhile, prospective participants began marshaling evidence of their endurance gaming credentials (I wish I were joking), with one user posting screenshots of their 1000+ day Duolingo streak alongside their Manifold daily login record (highly recommended).

Another was worried about upcoming camping trips interfering with their participation, leading to a lengthy discussion of whether a lack of wilderness internet access constitutes a legitimate excuse for missing a check-in.

Folded occupies a curious position at the intersection of several distinctly modern pathologies: the gamification of daily habits we’ve come to rely on to cope with our disastrous post-COVID attention spans, battle royale elimination mechanics, and the attention economy’s relentless demand for increasingly elaborate demonstrations of commitment.

Whether Folded succeeds as art, psychology, or entertainment (or a form of mental asylum) remains to be seen. But it has already succeeded in creating something distinctive to Manifold’s corner of the internet: intellectual, slightly unhinged, and possessed of that special quality that makes you unsure whether you’re witnessing a profound psychological experiment or an elaborate shitpost. Which, of course, is precisely what makes it worth watching.